Regular readers of our blogs will be aware we have been vocal supporters of the Microsoft Cloud strategy and indeed introduced Microsoft Azure and 365 to our clients to replace on-premises or ‘Early Cloud Multi-Tenanted Private Cloud’ solutions well before it became the vogue to do so.

As we move into the era of ‘consumption’, IT vendors are seeking to simplify their systems and how they are customised. Indeed, Microsoft is one of the firms leading the charge of ‘consumption’ IT, developing a universe of different applications which can be integrated to provide a seamless end-user experience (that’s another story for another day).

In recent years we have also seen more adoption of the concept of application ‘platforms’ such as Microsoft Dynamics, SalesForce or SAP which can be used by software vendors to fast track the creation of custom applications. As we move into a true cloud world we fully expect these platforms to become more prevalent and many vendors are talking about their “cloud platform” plans.

Whilst there are some compelling reasons for this strategic approach there are also some real challenges that firms need to be aware of.

As users of Dynamics 365 for the last five years, we have, in our own way, experienced the ups and downs of platform IT.

Background

In the old world of software development, you would start with a database engine (normally SQL) and build a client application around it. This approach was common whether you were developing a standard Windows package, a website application or a phone app.

In the new platform world software system providers can develop on top of a ‘middleware’ platform system that provides rich common functionality and seeks to simplify development and normalise interactions with the database. Furthermore, systems developed in this way enable customers to enhance core functionality by adding further customisations.

This provides a ‘layer cake’ approach to development strategy. The theory is the platform application provides a solid infrastructure, the application developer builds on this and provides a business application, and the end-customer can benefit by adding their own customisations to address their own unique business challenges without having to start from ground zero.

It makes compelling sense: rather than having to think of the basic functionality or deal with security challenges why not build on a platform provided by one of the world’s largest IT companies, that has the capabilities and resources to invest in a platform which is simply unmatchable by any provider to the legal sector?

There is also a drive to remove the need for traditional IT development skills with the introduction of a ‘no code’ or ‘low code’ tool set with the suggestion that whole systems can be developed by system users. Again, this is compelling for law firms who for years have struggled to get systems to operate in the way their fee earners want.

When products such as LexisOne, Peppermint and Fulcrum came to market I was really enthused to see the development as it is a compelling business strategy (expanded upon below) but as early adopters of those systems know, the delivery has not lived up to the strategic aims.

The Platform Challenge

The first widely adopted solutions based on such platforms introduced to the UK legal market were Peppermint and SA Global’s Evergreen (previously known as Lexis Nexis LexisOne), both based on Dynamics, and Fulcrum GT based on SAP.

As mentioned above one of the huge advantages of platform development is that you are able to leverage the functionality and investment created by global ‘superstate’ IT vendors. However, this is also one of the major risks.

Put simply, no vendor who creates solutions based on platform applications can control the development roadmap of the global vendors. Taking Dynamics as an example, Microsoft has released a new version of Dynamics every two to three years.

| Version | Released |
| --- | --- |
| Dynamics CRM 2011 | February 2011 |
| Dynamics CRM 2013 | July 2013 |
| Dynamics CRM 2016 | November 2015 |
| Dynamics 365 | October 2018 |

In the old world of development based on a SQL server, even major updates ensured backward compatibility for applications developed on them; but platform updates have, so far, been more disruptive and have required significant redevelopment or testing of applications built on them.

These upgrades are more akin to the change between major Windows versions (e.g. Windows XP to Windows 7 to Windows 8 to Windows 10). All applications used are significantly impacted by the changes to the platform on which they run: while most things operate okay there may be some real showstoppers or the system does not operate as efficiently as it should.

On the good news front, Microsoft is currently stating that Dynamics 365 will be the last version of the Dynamics platform and from this point on it will be an ‘evergreen’ platform (hence the name chosen by SA Global), always changing and adapting but providing certainty and stability for solutions developed on the platform.

However, things change. Windows 10 was meant to be the last ever version of Windows, but in the last week there has been a report that Microsoft is due to release Windows 11 in late June. It is therefore highly likely that at some point Microsoft will announce a fundamental change to the Dynamics platform.

The introduction of platform computing has also introduced a new word – ‘deprecation’ – which gives IT professionals a shiver down the spine. This lovely little word means the vendor has decided to turn off functionality or they are changing the way functionality works. Whilst on some occasions it’s something relatively minor such as a change of screen, on others such deprecation can have a significant impact on how the system is used or developed.

Development Tools

A good example of platform deprecation causing significant impact relates to the tools used for development. When Dynamics 365 was first launched, developers needed to use tools internal to Dynamics, such as ‘Dynamics Processes’ to create processes, dialogs and screens.

Over the last year Microsoft has changed tack and pushed developers to use Microsoft PowerAutomate instead, and will be deprecating the internal Dynamics development tools. There is, however, no method to move existing Dynamics workflows to Microsoft PowerAutomate, so customers need to completely redevelop any existing work using the new tools.

That is introducing a significant overhead for no immediate business gain. But there is logic to this strategy. As Microsoft builds upon its cloud-based ecosystem, Microsoft 365, there is a need to standardise the core technologies that underpin the applications within this product set. By forcing a move to PowerAutomate for Dynamics workflow, Microsoft has created a mechanism that can be used to manage workflow consistently across a variety of products. Therefore Dynamics, SharePoint, PowerApps and Machine Learning/AI models all use a consistent approach to workflow tooling.

Microsoft has also already deprecated elements within the Microsoft PowerAutomate toolset. To connect to a Dynamics system within MS PowerAutomate you used to use a method called ‘Dynamics 365 Connector’ which used the Common Data Services. However, Microsoft has now introduced the Microsoft Dataverse concept (someone is obviously into their DC or Marvel) and it is now necessary to re-do all Flows to use this new connection middleware.

Even between the writing of this article and its publication MS have changed the types of trigger from “Create or Update” to “Add or Change”.

This may seem like small beer and low-level detail but it is a significant headache for IT teams.

In the five years we have used Dynamics I have had to learn three different development toolsets and redevelop our customisations four times (once per toolset and once for a change of connector). It is a significant undertaking to do this even for our little business and has no immediate business value at all.

Just imagine how that would go down with a Partner in a busy law firm when you find all of your customisations need to be redone for zero business advantage (in the short term). For developers it's like doing thousands of lines only to see them ripped up in front of you and being told you need to do them again in blue rather than black ink.

Tectonic Plates

Our experience so far is this situation results in a jarring existence as the three different levels of application try to align with each other. The application provider is consistently having to keep up to speed with the changes the platform provider is making to the system and ensure they change their system to accommodate them.

The end-client needs to ensure they keep their customisations in line with both the application providers’ and platform providers’ changes.

Some firms who have taken on the early platform PMSs have described living in a constant state of change which has resulted in them effectively implementing several PMS solutions rather than the one they were expecting.

Furthermore, months of development effort have been wasted as the work has had to be redone to accommodate the change in toolsets.

Evolution has its Advantages

As frustrating as this is for developers, it does follow a certain logic when viewed from a strategic technology viewpoint. Technology never stands still, and for Microsoft to ensure it provides functionality that is relevant for customers, it cannot afford to rest on its laurels. This contrasts with some non-Microsoft PMS products, which in some cases run on legacy technology that can become problematic due to its age.

The Low Code/No Code Challenge

It has long been a challenge for PMS/CMS providers to create a development toolset that allows their system to be customised by a fee earner rather than requiring a developer.

Regardless of the sales pitch of ‘anyone can customise’, it is often the case that system customisers require skills in advanced logic, data manipulation and databases. Equally, given the different histories of the PMS/CMS vendors, each system has its own toolset which requires significant training to become familiar with and fluent in.

The truth, therefore, is that very few law firms have been able to sustain customising a PMS/CMS without employing IT professionals.

A modern ‘no code/low code’ system aims to enable power users to develop advanced applications by using wizards, drag and drop widgets or screen design and picking options from drop-down suggestions. The database structure and logic decisions will be masked from the developer using these technologies. Rather than using ‘geeky’ field names developers will choose from English descriptions.

The move to platforms with true ‘no code/low code’, using industry-standard tools, is therefore very compelling for law firms and I am certain we will see a move to this approach.

Our own experience with MS PowerAutomate is that it has great potential and, so long as Microsoft persists with its development and support, it will become a good tool.

However, as it stands at the moment, it is nowhere near ready to be a tool which can be put in the hands of non-developers. Equally, for an old-school developer like myself (okay, so it's been nearly twenty years since I really developed, but I can still bash out a mean SQL statement and VBA), the solution is cumbersome: what you could have done in two or three lines in a SQL trigger, stored procedure or VB.Net now takes a lot of different steps.

To explain this it’s best to show it. What follows is an extremely simple MS PowerAutomate flow. It is triggered whenever a Dynamics Opportunity is added to the system or reopened and simply posts a message into our internal Teams general chat channel.

Step 1: Create a new Flow which is triggered when a record is added or updated.

MS PowerAutomate will then open with a Microsoft Dataverse ‘trigger’ action box which lets you configure under what circumstances the flow runs.

The Table Name drop-down determines which table is being watched. This is the first complexity. For some tables, such as our example, the choice is obvious, but at other times the tables in this list do not match what you see in the front end. To be certain you will need to check in the development environment or documentation.

There also seem to be some inconsistencies in how Table names are represented. For example, many ‘entities’ in Dynamics are renamed so that they make sense to the application or business. As such, the Legal PMSs have renamed ‘Account’ to ‘Client’ and ‘Case’ to ‘Matter’.

However, in MS PowerAutomate sometimes the table name used is the one Microsoft determines and sometimes it is the renamed version you see in the Dynamics development tools.

Column Filter determines whether particular fields need to change for the flow to run. However, there is no selection box and the field names need to be typed in as a comma-separated list.

The name used needs to be the schema name and not the English label name used. To find this you will need to check in the development environment or documentation.

Given the vagaries of Dynamics naming conventions I often find it is necessary to open the screen designer and check the name from the properties of the desired field shown on the screen.

Should you mistype the fieldname you will not be told of any issue until the flow fails to run.
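Because the Column Filter box gives no feedback, one way to guard against typos is to check the list yourself before pasting it in. The following is a minimal sketch in Python, not part of MS PowerAutomate: the function name is hypothetical and the set of column names is an assumed, hand-maintained list that you would copy from the Dynamics customisation screens or documentation.

```python
# Assumed, hand-maintained list of column names for the Opportunity table.
# In practice these would be copied from the Dynamics customisation area.
KNOWN_COLUMNS = {"name", "statecode", "estimatedvalue", "parentaccountid"}


def check_column_filter(column_filter: str, known_columns: set[str]) -> list[str]:
    """Return the names in a comma-separated filter that are not known columns."""
    names = [part.strip() for part in column_filter.split(",") if part.strip()]
    return [name for name in names if name.lower() not in known_columns]


if __name__ == "__main__":
    # The last name is deliberately mistyped, so it is reported back.
    print(check_column_filter("name, statecode, estimatedvalu", KNOWN_COLUMNS))
```

An empty list means every name was recognised; anything returned is worth checking against the screen designer before the flow is saved.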

Row Filter makes use of the ODATA language to determine whether only certain data triggers the flow. ODATA is a very complex structure.

For example, below is what is needed to find records with dates earlier than or equal to today.

@lessOrEquals(formatDateTime(item()?['Start_x0020_Date'], 'yyyy-MM-dd'), formatDateTime(utcNow(), 'yyyy-MM-dd'))
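For comparison, the expression above looks like the flow's own expression language rather than ODATA itself. A plain ODATA filter is usually a much shorter string of the form "field operator value". The sketch below, in Python, builds such strings; the helper names are hypothetical, the field names `statecode` and `estimatedclosedate` are assumptions, and the exact date literal format the connector accepts should be checked against the documentation.

```python
from datetime import date


def odata_eq(field: str, value: int) -> str:
    """Build an equality clause such as 'statecode eq 0'."""
    return f"{field} eq {value}"


def odata_on_or_before(field: str, day: date) -> str:
    """Build a 'less than or equal' date clause using an ISO date literal."""
    return f"{field} le {day.isoformat()}"


def odata_and(*clauses: str) -> str:
    """Join clauses so that all of them must match."""
    return " and ".join(clauses)


if __name__ == "__main__":
    # Assumed field names, for illustration only.
    print(odata_and(
        odata_eq("statecode", 0),
        odata_on_or_before("estimatedclosedate", date.today()),
    ))
```

The difficulty described here is less the grammar of such a string than the fact that nothing validates it until the flow runs.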

Warning! One of the most annoying things I found was the lack of ‘follow through’ of actions on subsequent steps.

For example, it is possible to rename the trigger block so that, rather than it being referred to as the default ‘When a row is added, modified or deleted’, you can call it something relevant such as ‘New Opportunity’.

However, if you rename the block and have already built additional steps which reference it, all of those steps will need to be redone as the rename will break the link.

This is because when you select fields in subsequent steps MS PowerAutomate creates code behind the scenes such as:

@{outputs('User')?['body/fullname']}

‘User’ – is the name of the ‘block’ and ‘fullname’ is the fieldname.

If you rename the block after selecting a field on a step this behind the scene code is not updated.
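To make the missing ‘follow through’ concrete, this is the kind of find-and-replace a tool could apply on a rename. It is a minimal sketch in Python over expression text written with straight quotes; the function name is hypothetical and it is not something MS PowerAutomate exposes.

```python
import re


def rename_block_references(expression: str, old_name: str, new_name: str) -> str:
    """Rewrite outputs('old_name') references so they point at new_name."""
    pattern = re.compile(r"outputs\('" + re.escape(old_name) + r"'\)")
    # A function is used for the replacement so that any backslashes in
    # new_name are inserted literally rather than treated as escapes.
    return pattern.sub(lambda _match: f"outputs('{new_name}')", expression)


if __name__ == "__main__":
    before = "@{outputs('User')?['body/fullname']}"
    print(rename_block_references(before, "User", "Consultant"))
```

Only references to the renamed block are touched; expressions that point at other blocks are returned unchanged.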

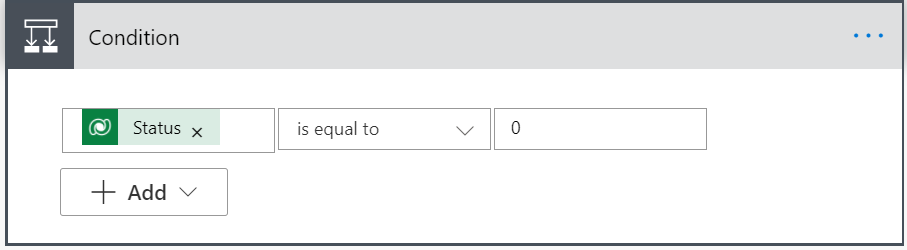

Step 2: Check to ensure the Engagement is Open

This requires a filter that checks whether the Status field is = 0 (which in Dynamics is open).

Yes, we could have done this as an ODATA filter as mentioned above, but after several failed attempts at getting the format right I lost the will to live and added it as a step.

At least in this condition box I get to choose the field name from a handy little popup box rather than having to type it in.

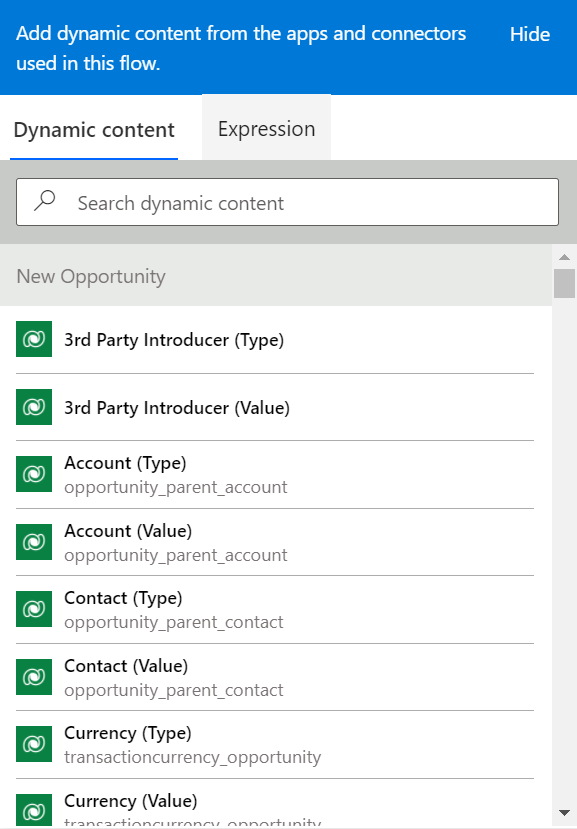

However, even this method is not easy. Later on we will want to find the ‘Account’ the ‘Opportunity’ is linked to. The popup gives us four options.

We need ‘Account Value’, which takes us down to two. The only way to find out which of the options we need to use is to look at the field properties in the Dynamics screen designer (which means you need Customisation permissions for Dynamics as well as MS Flow).

This shows both the Display Name of the field and its description. (Note that, frustratingly, the real name of the field, which is needed for filters etc., is not shown.)

Step 3: Update the Fields.

The next blocks are, for an ex-developer, extremely inefficient and, for a low-code business customiser, just mind-blowing.

In our simple message we just want to say who the Introducing Consultant is, the name of the Client Contact and the name of the Client.

Rather than normalising these fields and assuming you may want to show things such as ‘User name’ or ‘Firm Name’, MS PowerAutomate just returns a unique record identifier for a related record in a linked table.

It is therefore necessary to undertake a look-up on each of these fields in order to get to the fields we need from the linked-table.

Should any of these fields be blank you would need to make use of ‘conditions’ to jump over the look-up step. In our scenario you have 9 different possibilities you would have to manage, which given how we build the message becomes a real pain and it would be necessary to use temporary variables to manage round the problem.
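For contrast, this is roughly how the same blank-handling looks in conventional code, where one fallback covers every combination of missing links. It is a minimal sketch in Python: the lookup tables, identifiers and field names are all hypothetical stand-ins for the linked Dynamics tables.

```python
from typing import Optional

# Hypothetical lookup tables keyed by record identifier.
USERS = {"u-1": "Jane Smith"}
CONTACTS = {"c-1": "John Doe"}
ACCOUNTS = {"a-1": "Example LLP"}


def resolve(table: dict[str, str], record_id: Optional[str], fallback: str = "(not set)") -> str:
    """Look up a display name, falling back when the link is blank or unknown."""
    if not record_id:
        return fallback
    return table.get(record_id, fallback)


def build_message(opportunity: dict[str, Optional[str]]) -> str:
    """Compose the notification text from an opportunity's linked records."""
    consultant = resolve(USERS, opportunity.get("ownerid"))
    contact = resolve(CONTACTS, opportunity.get("parentcontactid"))
    client = resolve(ACCOUNTS, opportunity.get("parentaccountid"))
    return f"New opportunity: {client} / {contact}, introduced by {consultant}"


if __name__ == "__main__":
    print(build_message({"ownerid": "u-1", "parentcontactid": None, "parentaccountid": "a-1"}))
```

The point is not that Python is the answer, only that the branching the flow forces you to draw as separate condition blocks collapses into a single reusable function.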

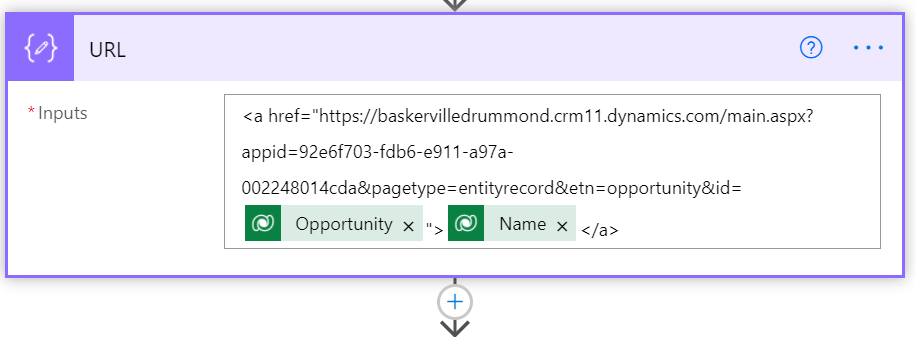

Step 4: Calculate the Dynamics Opportunity URL

We also want to include a URL hyperlink to the new Opportunity. This is a standard thing to include to any message so the receiver can just click to open the record in Dynamics.

However, MS PowerAutomate and the Microsoft Dataverse connection do not cater for this. The old deprecated Common Data Services did have the functionality, and this is a good example of the frustrations of deprecation: vendors rush out newer things to use without fully bringing over the functionality of what they are disposing of.

So, currently, we have to build a URL from scratch. In our example I have cheated and hard-coded the Dynamics location and Custom App ID. This short-cut could cause us significant issues if we change either of those and my approach is against all good development practice, which should be to find these from the system environment.
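As an illustration of the better practice described here, the sketch below builds the link from configuration rather than hard-coded values. It is written in Python purely to show the idea. The environment variable names are hypothetical, and the `main.aspx` query format is an assumption that should be checked against your own environment.

```python
import os
from urllib.parse import urlencode


def opportunity_url(record_id: str, base_url: str, app_id: str) -> str:
    """Build a link that opens one Opportunity record in a given app."""
    query = urlencode({
        "appid": app_id,
        "pagetype": "entityrecord",
        "etn": "opportunity",  # logical name of the table
        "id": record_id,
    })
    return f"{base_url.rstrip('/')}/main.aspx?{query}"


if __name__ == "__main__":
    # Hypothetical variable names; the defaults are placeholders only.
    base = os.environ.get("DYNAMICS_BASE_URL", "https://example.crm.dynamics.com")
    app = os.environ.get("DYNAMICS_APP_ID", "00000000-0000-0000-0000-000000000000")
    print(opportunity_url("11111111-1111-1111-1111-111111111111", base, app))
```

Keeping the location and App ID in one place means a change of environment is a configuration change rather than an edit to every flow that builds a link.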

By this point I’d got frustrated and gone for the quick win, which I expect many people will do and which is, again, an example of business risk.

Step 5: Send Message to Teams Chat Channel

We are finally in the position to send a message to our MS Teams Channel.

This is the simplest step so far. It is simply a case of completing the drop-downs, typing a message and using our ‘Dynamic Content’ selector to pick the fields we want to include in the message.

Again there will be duplicates in this list but using the name of the ‘blocks’ it does become easier to determine which field you want to display.

Written by…

David Baskerville

So What?

As I said earlier in this article I think the era of ‘consumption’ and platform compute has arrived and is here to stay. There are some compelling advantages but they need to be balanced by the ongoing change and skills required to manage these types of environments.

Salespeople will tell you of the ease of platform compute, the advantages of ‘Low Code/ No Code’ development. I am sure these tools will improve over time but as things stand MS PowerAutomate is not in a state where you should expect the miracles you will see in the press. Whilst it is undoubtedly the answer to some things, it is not the answer to everything.

Speaking as an old developer who is essentially a business user these days, it is the most illogical and frustrating tool I have ever had to use.